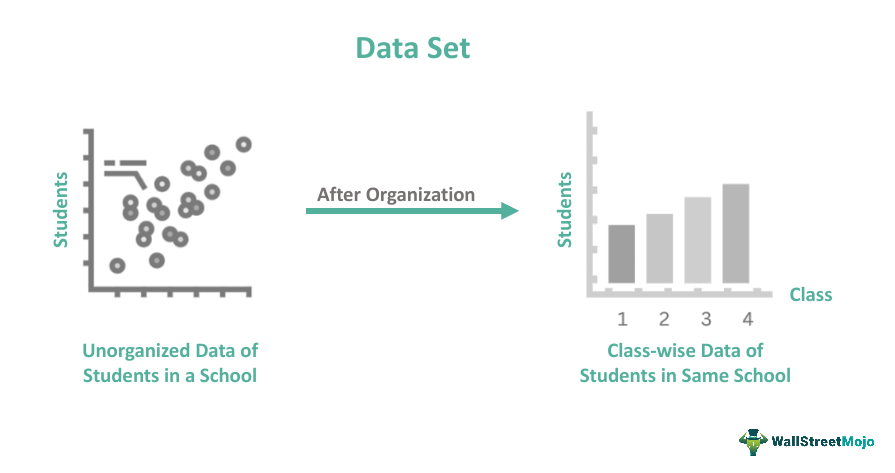

The dlt library provides a convenient way to load data from the local filesystem. The verified filesystem source reads files stored in formats like JSONL, Parquet, or CSV, and the local filesystem destination lets you store data in a local folder, enabling the creation of data lakes. The same documentation also covers the reverse direction: loading data from PostgreSQL to the local filesystem using dlt, an open-source Python library.

For this example, we want to load data from the local filesystem to Timescale or PostgreSQL. The dlt CLI has a useful command to get you started with any combination of source and destination, and you can use it to create a starting point for either pipeline.

The second topic is an error that users hit when loading a dataset with the Hugging Face `datasets` Python module in a local notebook, starting from code like `>>> from datasets import load_dataset` followed by `>>> data_files = {train: …`. The call fails with "NotImplementedError: Loading a dataset cached in a LocalFileSystem is not supported".

As long as you have downloaded a dataset from the Hub or the 🤗 Datasets GitHub repository before, it should be cached. This means you can reload the dataset from the cache and use it offline. The error above appears when that cached copy cannot be read back.

Based on the error message, the likely cause is a version mismatch with fsspec. One user reports that everything worked with `datasets==1.11.0` and that the error appeared only after updating to the latest version.
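Since a version mismatch with fsspec is the suspected cause, a reasonable first step is to record which versions are actually installed in the environment the notebook uses. The helper below is a minimal sketch using only the standard library. The function name `installed_versions` is hypothetical, and the sketch only reports versions; it does not decide which combinations are compatible.

```python
from importlib import metadata


def installed_versions(packages=("datasets", "fsspec")):
    """Return a mapping of package name to installed version (None if absent)."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            # The package is not installed in this environment.
            versions[name] = None
    return versions


if __name__ == "__main__":
    for name, version in installed_versions().items():
        print(f"{name}: {version or 'not installed'}")
```

Running this inside the notebook kernel, rather than in a separate shell, helps confirm that the kernel and the virtual environment see the same packages.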
Reports of the error come from several setups. One user sees it in Google Colab when loading the dataset `osondu/reddit_autism_dataset`. Another runs a Python 3.10.13 kernel that matches their virtual environment. Restarting the runtime and setting a custom `cache_dir` did not help: "I've tried restarting the runtime and setting a custom cache_dir, but the issue …".

Dataset corruption is another possible cause: the cached dataset itself might be corrupted, preventing loading. Try loading the original dataset (before caching) to rule out corruption in the …

A related cache problem exists in the `load_dataset` method: when you modify a compressed file in a local folder, `load_dataset` does not detect the change and loads the previous version.

A separate question concerns Databricks: "I need to uncompress files in S3, so I need to copy the files to the local file system. When I use `dbutils.fs.cp(dbfs_file, local_file)` with the DBFS file as `s3://path_to_file` or `dbfs://path_to_file` …"

On the dlt side, the verified source streams CSV, Parquet, and JSONL files from the local filesystem using …
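Because `load_dataset` may keep serving a cached copy after a local compressed file has been modified, one workaround is to track the file's content yourself. The sketch below is an assumption-laden illustration, not part of the `datasets` library: the helper names `file_fingerprint` and `has_changed` and the idea of a side-car fingerprint file are hypothetical. It hashes the file with SHA-256 and compares the result with the hash recorded on the previous run.

```python
import hashlib
from pathlib import Path


def file_fingerprint(path, chunk_size=1 << 20):
    """Return the SHA-256 hex digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def has_changed(path, fingerprint_file):
    """Compare the file with the fingerprint saved last time, then save the new one.

    Returns True on the first call (nothing recorded yet) or when the
    content differs from the previous call.
    """
    current = file_fingerprint(path)
    record = Path(fingerprint_file)
    previous = record.read_text().strip() if record.exists() else None
    record.write_text(current)
    return previous != current
```

When `has_changed` returns `True`, one option is to bypass the cache for that load, for example by passing `download_mode="force_redownload"` to `load_dataset`. Whether that resolves the stale-cache behavior described above depends on the installed `datasets` version.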

Dataset Loading Broken? The Local Filesystem Fix You Need
